An AI turned this week’s notes into poetry.

A Chronicle of Week Twenty-One

In a week where work did reign,

Much to tell there’s little gain,

Round it though, we gently dance,

For work’s secrets shan’t have chance.

It was one of those weeks where not an awful lot happened outside of work. I don’t talk about work here but let’s sort of circle it.

One thing I can say is that I started making a presentation deck about the use of generative AI and GPT in design, initially to share my findings so far but increasingly as an exercise in structuring my thoughts into anything at all useful.

A couple of things, on reflection: an AI assistant or collaborator poses significant risks for a lazy human in certain tasks since it tempts us to quickly accept its output without evaluating potential improvements. Assuming AI can do a job to 90% of a human’s rigor and quality, figuring out what the other 10% is without having done the same work yourself is quite the challenge. So the efficiency gains may not be as significant as you think, not until we figure out some smarter processes.

An example of what I mean: you can feed ChatGPT with notes from an interview conducted with a customer about their experiences and how a product fits into their lives. Supply enough interviews, and ChatGPT can do the work of summarizing them in aggregate, presenting key themes and areas worth looking into, like maybe everyone thinks the price is too high, but it’s because they don’t fully understand the value of what they’re buying.

It can create a bunch of frameworks to illustrate these findings, like personas and service blueprints. And it can even suggest solutions, like better marketing materials to explain this value to customers. The AI’s output might look pretty good, similar to what a team of human designers would (more slowly) produce, and a company might be tempted to make business decisions based on it. In fact, a team of human designers who haven’t read the interview notes themselves or thought deeply about it might also look at the AI’s work and say it’s good to go.

The level of confidence and finality inherent in these AI outputs is incredibly convincing. But if a human were to go through all the interviews, listening to the recordings perhaps, they might realize there was a missing element, a feeling subtly unsaid on the margins, that means some customers do see the extra quality, they just wish there was a cheaper version of the product that did less. Skimming through the finished research report crafted by the AI, you wouldn’t even begin to guess where in the sea of correct conclusions this exception could be hiding.

But there’s no question that this stuff is ready today to do some tasks like image editing, seen in Photoshop’s impressive beta release of a “Generative Fill” feature this week. I took a stock photo and doubled its height, and it was able to get the context of the scene and fill in the missing ceiling almost perfectly. That would have taken an experienced image compositor at least a few minutes, and anyone else way too much time. Just a couple of clicks now.

I also looked into what Adobe is building with its Sensei suite of AI marketing tools, and that dream of generating and sending personalized ads, as in a unique package of art and copy tailored to a single customer’s behavior, would seem to be already here. I’m not 100% sure how this works everywhere, but in the past, you’d still need copywriters and art people involved in the process after marketers had identified the “customer journeys” and content-goes-here templates. With the opportunities now being identified, advertising messages crafted, and email offers all sent with a single click by the same person, there’s hardly a crack in the door left for the traditional artists and copywriters to make their case. Yet, the quality is good enough to satisfy all but the most discerning of corporations.

You may observe that two of the largest advertising platforms are already in this space.

What do you think about the current advancements in AI and their implications? Share your thoughts in the comments below.

(One more example: I asked ChatGPT to help suggest edits for this post, and it rewrote one of the above sentences to be better. I liked it, but on closer inspection, there was a glaring semantic error I had to fix myself. It also suggested the call to action above, to increase engagement. Talk to me!)

===

There seems to be yet another wave of Covid sweeping through the city, based on the fact that several people I know have come down with it, and every bus and train car I’ve been on this week had more people wearing masks, suggesting that they, too, know people who’ve come down with it.

Kim is going away for a couple of weeks, and I’m hoping she doesn’t run into it out there either; one of her colleagues caught it while traveling in the region a few days ago. I’m planning to stay home as much as I can during this time, and to finish as many video games as possible.

===

I asked ChatGPT to analyze my post and it suggested I add a conclusion. I said “you do it, then”. Here’s what we agreed on:

This week has been a whirlwind of diverse experiences and discoveries. From immersing myself in the captivating sounds of Random Access Memories in Dolby Atmos to exploring the world of Korean soft power through Jinny’s Kitchen, I’ve been on a journey of curiosity and appreciation.

The Anbernic RG35XX has provided me with nostalgic gaming moments, while progressing in Breath of the Wild has been both thrilling and daunting. Even a simple nail painting team event at work has reminded me of the freedom to embrace self-expression.

These experiences have sparked my curiosity and inspired me to share my thoughts as a writer. Life is full of opportunities to explore, connect, and find inspiration in unexpected places. So, whether it’s through music, cuisine, gaming, or the little joys, embrace the unknown and discover the stories and experiences that await.

Thank you for joining me on this adventure, and until next time!

Perhaps you’ve heard of what’s been going on in generative AI and thought, “I have no need to generate any pictures or professional email filler”, and dismissed the subject. Perhaps, like me, you’ve read that GPT-4 can now “pass exams” from the SATs to legal bar exams, accounting ones, and so on, without really knowing what that means. It can choose the right answer out of a list, and write little structured essays on given topics? That’s amazing, but not unexpected.

But I’m here to tell you that I asked ChatGPT (GPT-4) a question yesterday, quite an exam question, and its answer floored me. These things are called generative AI, but that name undersells the incredible interpretive power they possess when it comes to language and writing comprehension.

I asked it to analyze this poem by Gabrielle Calvocoressi, one of the most devastating things I’ve read in the past year: Miss you. Would like to grab that chilled tofu we love. Go read it, I’ll wait. Get some tissues.

I asked it to explain what the poem was about, and call out what it’s doing as a piece of writing to achieve its emotional impact. I’m not aware that any similar analysis of this poem exists online, if you’re wondering. My thoughts going into this: I know these AIs can write poetry, but I haven’t seen anything great. They must understand unstructured or unusually structured language to some degree, but can it “read” poetry from a human point of view? Probably not? Will it really understand what this is implying: the loss, the empty spaces, the long journey, the light body? And now I realize how dumb I was, because clearly it’s “read” thousands of books of and about poetry, and literary criticism, and style, and should know a lot more than the average person.

Here’s its answer, unedited. Good enough to pass any high-school literature class, if not any blind test of humanity.

===

This is a deeply emotional poem that appears to be exploring themes of grief, loss, longing, memory, and the power of shared experiences. It is presented as a monologue, presumably from the perspective of the speaker who is missing someone who is now absent. It’s filled with a sense of yearning, a desire for the return of the past and the presence of someone who is deeply missed.

The poem starts off with the speaker expressing their indifference to the physical state of the missing person, suggesting that they would be happy even if the person could only present in their ‘light body’. This term could have different interpretations but it seems to suggest a spiritual or ethereal form, possibly indicating that the person is deceased or in a state where they cannot physically be present.

The middle of the poem is filled with reminiscences of shared experiences. The speaker misses the shared rituals and discussions about mundane things such as the type of chilis used in a dish of tofu. This highlights the value of seemingly trivial shared moments in relationships and their ability to evoke strong emotions when remembered. The speaker also mentions watching the person on screens and wishing they could get out of the hospital, which suggests a period of illness or suffering that the person had to endure.

Towards the end, the speaker talks about being willing to order their shared favorite dish and eat it alone, even though it seems painful for them. This can be seen as an attempt to honor their shared memories and perhaps a step towards acceptance of the loss.

The speaker also acknowledges not knowing the journey the absent person might be on, whether it’s long or not, and expresses a wish to know more about their current state – what they’re reading, if they’re reading, which suggests a longing for a continuation of their shared intellectual and emotional connection.

Throughout the poem, the simple language and the direct, honest sentiment adds to its emotional power. The repetition of “Miss you” and “Wish you could” emphasizes the sense of longing and loss. The specific details of shared experiences and the mundanity of their shared conversations humanize the relationship, making the emotions more relatable and poignant.

Overall, the poem is a moving exploration of love, loss, and the power of shared experiences. It speaks to the human condition of missing loved ones, the difficulty of letting go, and the beautiful yet painful memories that bind us to the past.

I was in a cab listening to music on my AirPods, and just as we were pulling up, I switched to Transparency Mode and heard a song playing over the car’s radio that sounded kinda familiar. I knew it was a remix of some tune I wanted to know, and managed to Shazam it before getting out.

Looking into it later, I realized the melody was what I’d been trying to figure out about Charli XCX’s White Mercedes for over a year. Why does that one line she sings — literally the line “like a white Mercedes” — sound like some other song I can’t name? It turns out, it’s literally a song I would have absorbed from the world around me but never intentionally listened to: One Direction’s Night Changes from 2014. Ahhh it’s so good to have that itch scratched! And there are so many more like this I’ve yet to solve.

Let me say it again for the search engines: Charli XCX’s White Mercedes sounds like, samples, or contains an interpolation from One Direction’s Night Changes.

Another similar thing happened as I was playing WarioWare Inc. (GBA, 2003) again for the first time in years. The background music in one stage awoke some long dormant memory and I needed to know what pop song from my younger days it sounded like. After a lot of humming aloud and trying to Shazam it and searching online… I concluded that the song it reminded me of was… itself. It’s called Drifting Away, and I must have really loved it back when I was playing the game for the first time.

Speaking of retro games, I lasted a full week. The Anbernic RG35XX I said I wouldn’t buy since I already have a Retroid Pocket Flip is now on the way to me from China. There are some reports of shoddy QA and poor long-term durability, but for S$90 I think that’s to be expected.

===

Another week, another bunch of water-cooler conversations about AI. Specifically how it relates to our work in design: as accelerator, collaborator, ambiguous combatant, amoral replacement. I don’t just mean the making pictures and writing words part, but analyzing messy human interactions (it’s just unstructured data) and presenting them in new ways.

I ran one experiment with ChatGPT on Sunday afternoon, just for kicks, and it sort of blew my mind. From a handful of behavioral traits and demographic details I supplied, it was able to inhabit a fictional personality that I could speak to and pitch various products to. So far so par for the course. But then it reacted to a hypothetical KFC offering called “The Colonel’s Colossal Combo” in a way I didn’t expect, citing a conflict with values and dietary preferences that I did not specify. When asked where they came from, it argued that although they were not specified, they could be reasonably expected from the “Frank” persona I’d created, because of some other background that I DID provide. It sounded a lot like intelligent reasoning to me, and regardless of how it works, I was happy to accept the inference the same as if a colleague were making it.

Like with all advances in automation, it’s inevitable that we’ll now be able to (have to) do more in less time, with fewer people. Until things go “too far” and need to be reined in, it’s not even a question of whether we should: every industry is incentivized to discover what can be done before it gets done to them. I think there are some exciting opportunities for designers, and a slew of unknown consequences for society. And just like that, we’re back in a new “fuck around” phase of the tech cycle.

===

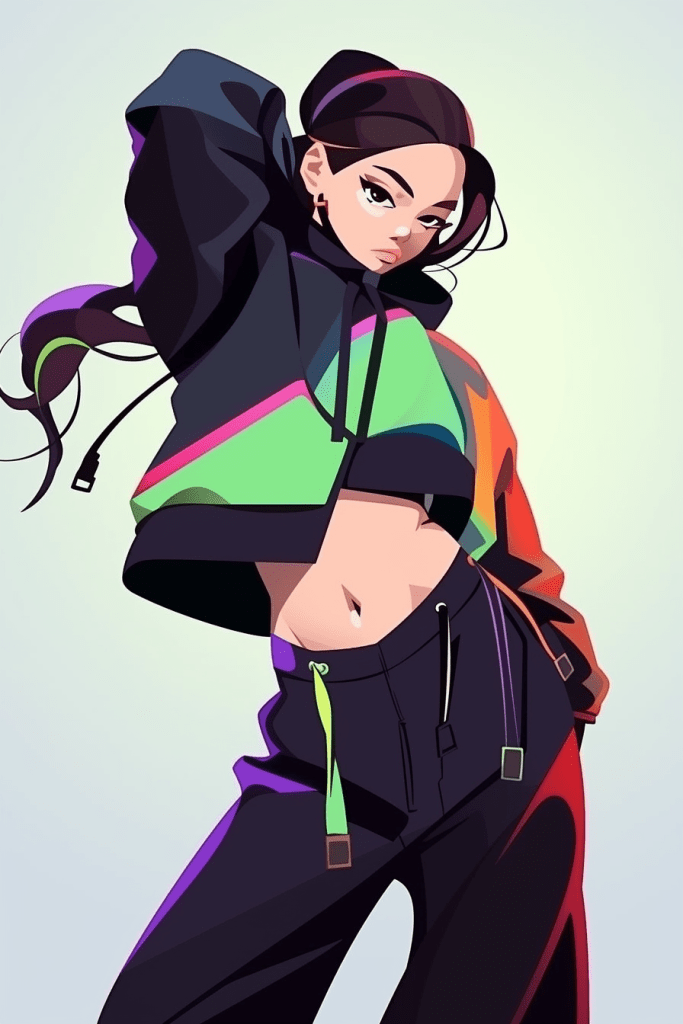

A couple of weeks ago I made a bunch of fashion-style athleisure photos with Midjourney v5 but somehow forgot to post them. The photorealistic ones are quite incredible, and the few illustrations I got were really strong too.

This week, v5.1 dropped, promising more opinionated outputs and sharper details, so I tried the same prompt again. Many of the results were as broken as these bodies.

They probably fixed something quietly because it’s been more reliable in the days since. I thought it would be interesting to do a comparison of models 1 through 5.1 with the same prompt. It’s crazy how far it’s come in just over a year.

photograph of Queen Elizabeth II in a dim video arcade, sitting at a street fighter 2 arcade cabinet, intense concentration playing game, side view, screen glow reflected on her face, atmospheric dramatic lighting --ar 3:2

If you saw Midjourney a year ago, you were probably impressed by how it and Dall-E 2 could turn quite natural text descriptions into imagery, even if the results were still quite hallucinatory, like DeepDream’s outputs circa 2015. I don’t think you would have expected to see the pace of improvement be this quick.

It’s not just rendering improvements from distorted pastiches to photorealistic scenes with internal logic (global light affecting nearby objects realistically, fabrics folding, leather seat covers stretching under buttocks), but also how it’s evolved through feedback and training to understand intent: the idea of a “side view” started working from v4. None of the earlier re-generations got me the camera angle I was going for. The tools that promise to do this for video are probably going to get good faster than you expect.