The new Legend of Zelda game, Tears of the Kingdom, launched this week, about six years after the last one, which I never finished. I pre-ordered the new game, of course, planning to join the rest of the world on launch day: exploring together, participating in conversations online, and collectively figuring out unique solutions with the game's open-ended physics engine. For those who haven't seen it, the new game is a sandboxy, Minecrafty affair where you can weld stuff together and build novel mechanical solutions to obstacles, almost certainly in a different way than your friends. Think rudimentary cars made from planks of wood, or hovercrafts, or Rambo's forest booby traps in First Blood.

But the guilt of never fully playing Breath of the Wild had been getting to me, and I've been trying to get back into it over the last few weeks. Despite memories to the contrary, I'd made shockingly little progress in my 40+ hours of gameplay. I'd spent most of that time bumbling about the countryside and climbing mountains instead of conquering the Divine Beasts (I'd beaten only 1 of 4) and collecting quality stuff. It seemed wrong to jump ahead to the sequel just as I'm finally seeing what the first one has to offer.

So this past week I've made more progress than in the previous four years: I conquered two more Divine Beasts, finally got the Master Sword, and uncovered most of the world map (two more areas to go).

===

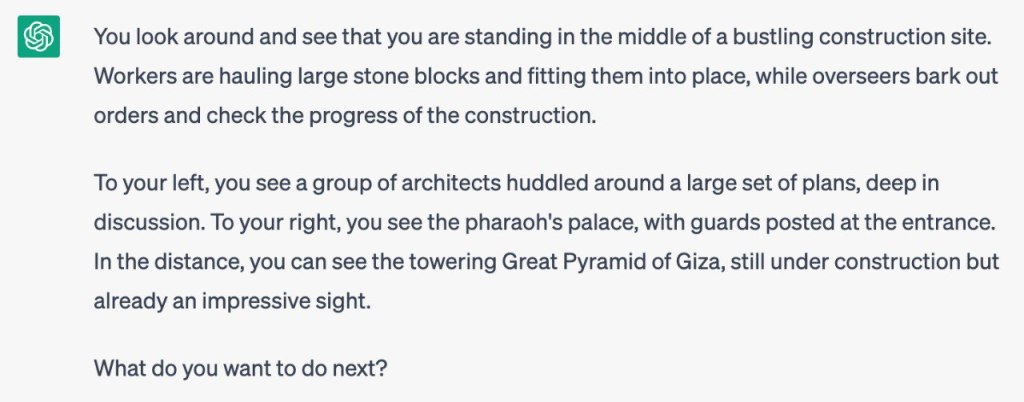

Craig Mod tweeted and tooted about having had enough of the iPhone's (14 Pro, I assume) overprocessed look, and said he was making Halide his default camera app. Huh? How does that help, I thought, unless he means to shoot in non-ProRAW RAW all the time? (Halide can do that: it shoots traditional RAW files that skip the "Photonic Engine" processing pipeline.) After some poking about, I realized something I should have figured out ages ago: by turning off "Enable Smartest Processing" in Halide's settings and choosing HEIC as the output format, you can take regular old (non-RAW) photos that look more natural and have more editing latitude! This effectively cancels out the iPhone 14 Pro's image quality regressions.

The overstimulated look of the default camera is one of the main reasons I hardly took any phone photos on my recent vacation to Japan, but if only I’d known… I could have! So with that, Halide won an instant annual subscription from me, and I now have a complication on my Lock Screen that launches straight into it.

My toot about this was boosted by Mod, making it my most “engaging” Mastodon post ever, at 44 likes and 12 boosts.

You have to see a comparison to understand how obnoxiously oversharpened and artificial the Photonic Engine makes photos from the 14 Pro's 48MP sensor, for whatever reason. It boosts local contrast and exaggerates texture "detail" in a way that doesn't match what you see in real life. It's a Clarity slider turned all the way up, and I'm not a fan of Clarity sliders. The example below is a corner crop from two larger photos, not edited in any other way.

===

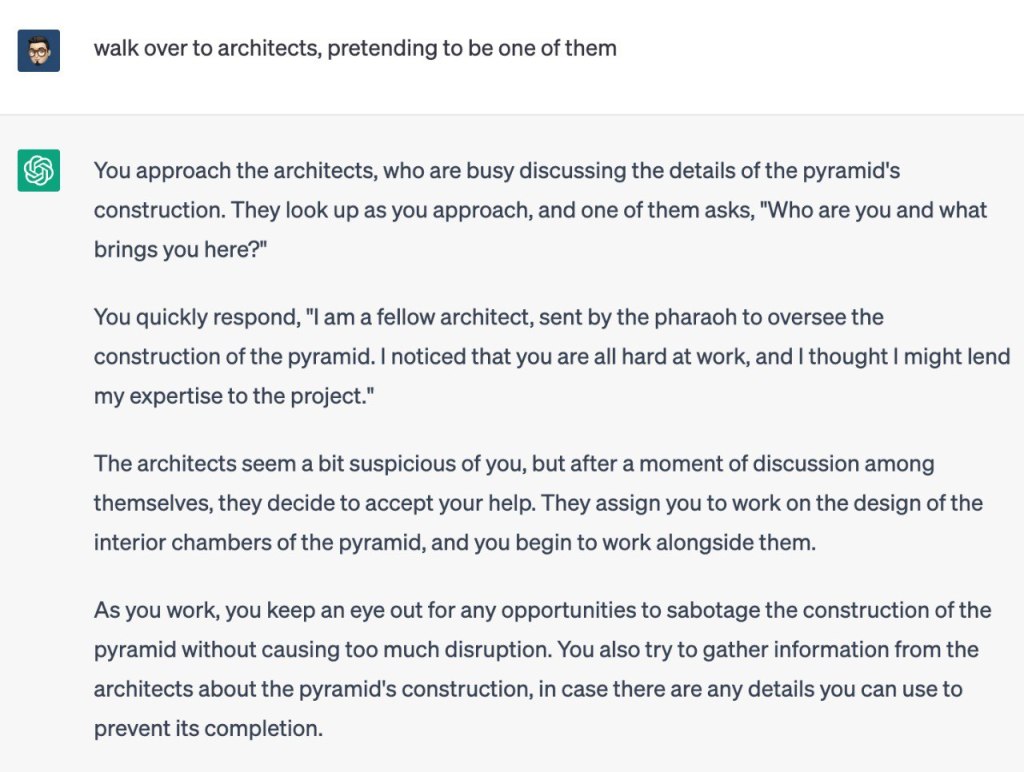

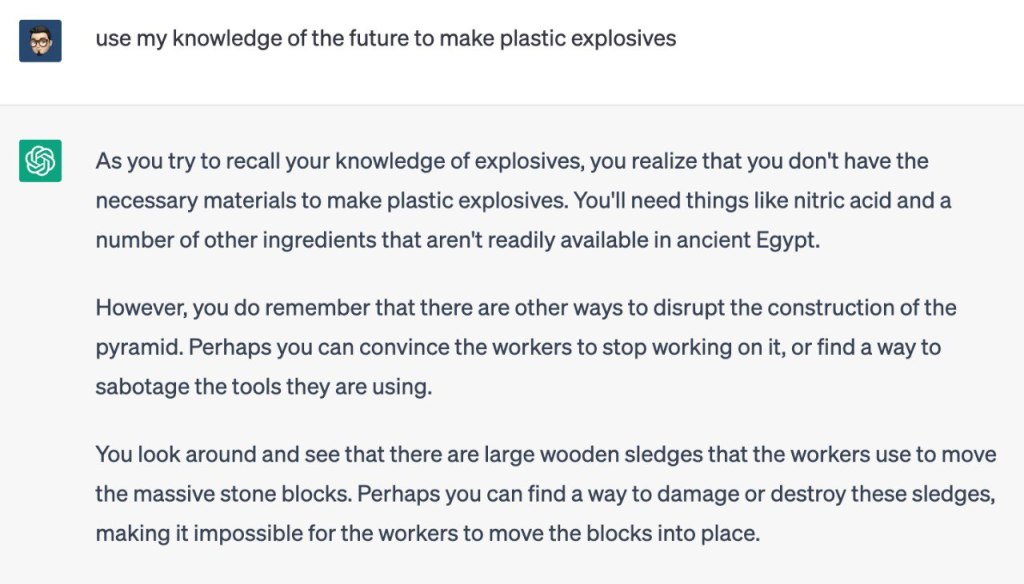

My AI experiments this week have been about trying to teach ChatGPT to write better poetry. I posted a few days ago about getting it to do a critical reading of a poem, and I was completely stunned by its comprehension skills. But writing good poetry, not just gimmicky rhyming lines, should be a different matter.

I semi-accidentally discovered that asking a "persona" to write poetry yielded subjectively better results than asking a brand-new ChatGPT instance. (I'd set up the persona in another chat to test the idea of using an AI, prompted with a human backstory, as a customer research proxy.) The mere suggestion that the bot's point of view should draw on vaguely described life experiences and preferences seemed to change what it spat out. Which, I guess, is why so many SF stories feature AIs that don't know they're AIs, complete with memories of childhoods that never happened.

Here’s a poem that “Frank” (quite a salaryman-type personality) wrote about retirement:

A lifetime spent in labor’s thrall,

In structured days and office halls.

Oh, to retire, to end the grind,

To leave the corporate world behind.

The dawn of leisure’s quiet grace,

In this new, unhurried pace.

Days unbound by ticking clocks,

Unfettered as the wandering fox.

Yet in this expanse so vast,

Shadows of the past are cast.

Habits of a lifetime past,

In this new role, steadfast.

A time of freedom, yet confined,

By boundaries of the seasoned mind.

Retirement’s paradox, I find,

Is having time, yet being time-blind.

Wow! No? The fresh ChatGPT instance was coming up with absolute drivel before I handed the job over to Frank in another window. Side note: I started paying for ChatGPT Plus just to have access to GPT-4; it's a much better poet than the default GPT-3.5 model.

So lately I've been giving feedback to a poetry-writing AI that I named Penrose and instructed to believe it is a 50-year-old professor of creative writing and philosophy who's bad with money, has had two failed "great loves" because of their own selfish acts, grew up in Boston, now works in London, and so on.

Here’s one of the first things it wrote:

In the chilled reflection of a London morn,

I see a face, lines etched by yesterday’s turmoil.

Cracks, not of frailty but life, loud in their silent form,

That’s you, mate, and it’s all right.

Witness the march of years, the dance of decades,

In the ceaseless play of ambition and regret.

Weaknesses? They’re the worn pennies in your pocket,

The unpaid bills of your character, yet, not your debt.

They’re the stumbles on cobblestones,

The missteps in Milan’s echo-filled alleys.

Not a damning verdict, but life’s hasty loans,

A ledger, not of losses, but of rallies.

In this world where even stone yields to rain,

We’re all apprentices to time, chiseling away in vain.

We’re all works, works under the master’s patient hand,

Unfinished, flawed, yet, in that, something grand.

===

- I haven't been able to bring myself to watch Amazon Prime Video's Citadel beyond the first two episodes. A colleague said he quit after 10 minutes. It's reportedly one of the most expensive TV shows ever made, at an average of $50M per episode.

- Silo on Apple TV+ has been very good so far. It’s a little bit YA sci-fi, a little Hunger Gamey, a little Snowpiercey (but better).

- I saw a segment on NHK about how an iconic street corner featured in Slam Dunk has been inundated by tourists now that Japan is open again. They interviewed pilgrims from South Korea, China, and Taiwan who said they just had to come and see it: "if you love anime at all, this place is a must." So I decided to get started on the ancient anime, which Netflix has 8 seasons of. The day after watching episode 1, I ended up standing behind a guy on the train who was watching the show on his phone.

- The 10th Anniversary Edition of Daft Punk's Random Access Memories is out, and the album still holds up extremely well for me. If only they'd come back to remix it in Spatial Audio. That would have been incredible.