It was Hari Raya Puasa here on Wednesday, which, along with the city’s oppressively hot and humid weather, left those of us who don’t celebrate the holiday feeling somewhat unsatisfied upon returning to work on Thursday. More than one person slipped and called it a Monday, or asked how the weekend was. So instead of a four-day workweek, it felt like two weeks in one.

Perhaps the depressed mood was justified. Earlier in the week, tragedy struck a colleague who lost their father to a heart attack, a loss all too familiar within our team, since the same thing happened to another young designer just over a year ago. And as you may recall, just nine weeks ago another friend lost their dad too. At the same time, my thoughts have been occupied by a family friend, virtually family, who is currently recovering from surgery with an as-yet-unquantified cancer running loose in her body.

I’m tired, but feeling better about the recent decision to make room for more important things than my current work. I came across this poem about mortality that captures the suddenness of loss and how we take everything for granted: If You Knew, by Ellen Bass. I was also reminded of this Zen concept that a glass always exists in two states, whole and broken, while reading responses to a tweet asking for “sentences that will change your life immediately upon reading”.

Hitting the books

Speaking of reading, I picked up Isle McElroy’s People Collide again after months of sipping its beautiful phrases through a tiny time straw, and finished it quickly. It’s the best thing I’ve read in many months: a profound questioning of what it means to be a particular person in a specific body, and how much of you makes up who you are to everyone else. At its core it’s a Freaky Friday body swap story. I don’t know if it’s because McElroy is trans that these perspectives and insights are so tangible, but I felt them. Even though the story didn’t go where I wanted at all, I gave it five stars on Goodreads because the final page is a triumph. I had to fight back tears of admiration while reading it on the bus.

Right after that, the book train was rolling again and I read After Steve: How Apple Became a Trillion-Dollar Company and Lost Its Soul by Tripp Mickle. It had some inside stories and gossip I hadn’t heard before, including an account of how Jony Ive “neglected” his design leadership role in his later years, a story I’d been curious to hear. Still, it’s one of those non-fiction narratives that dramatize and assume a lot about what their subjects did and felt at key moments, things nobody can know for certain.

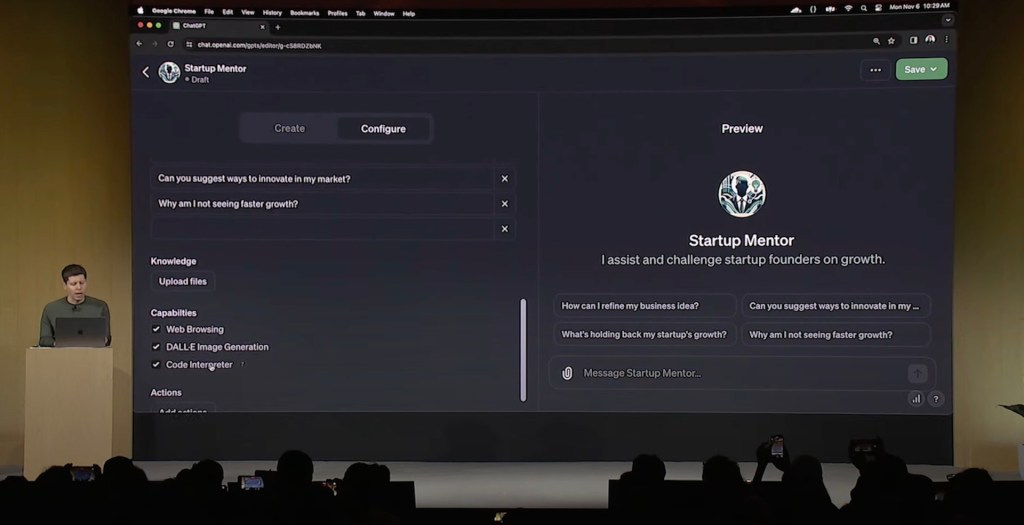

Here it comes, the AI part

Meanwhile, the Apple Design Team alums who decamped to Humane launched their first product, the “Ai Pin”, to largely middling reviews from tech outlets like The Verge. Quick recap: this is a camera-equipped, voice-enabled wearable you attach to your clothing, letting you access a generative AI assistant so you can ask general questions and take various actions without getting your phone out. In theory.

Most of its faults seem to stem from issues intrinsic to OpenAI’s GPT models and online services, on which the Pin is completely dependent. It’s a bit tragic for Humane’s clearly talented startup team. I’m inclined to see the hardware as a beautiful engineering accomplishment, and the parts of the user experience they could customize, like the laser projector and prompt design, are probably pretty good, but that doesn’t change the fact that the Pin’s brains are borrowed. A company with financial independence and the ability to make its own hardware, software, and AI services would have a better chance. Hmm… is there anyone like that?

Also this week, a new AI music generation tool called Udio launched in public beta, and I spent some time with it. I’d only ever played with AI models that do text, images, and video, never audio. It’s free while in beta and lets you make a generous number of samples, so there’s no reason not to take a look.

Basically, you describe the song you want with a text prompt, and it spits out a 33-second clip. From there, you can remix or extend the clip by adding more 33-second chunks. It generates everything from the melodies to the lyrics (you can provide your own if you want), including all the instruments and voices you hear. Is it any good? It’s very impressive, although not every song is a banger yet. Listening to the hip-hop instrumentals featured on the home page, I thought to ask for a couple of conscious rap songs, and they came out well, with convincing-sounding vocals. I then asked it to write a jazzy number about blogging on a weekly basis, and you can judge for yourself if the future is here.

At present, I see this as a fun toy for the not-so-musically inclined like myself, and as an inspiration faucet for amateur songwriters who work faster with a starting point. So, pretty much what ChatGPT is for everything else. And as with ChatGPT, I can see a future where this threatens human livelihoods by being good enough, at the very least disrupting the background music industry.

Comfort sounds

One musical suite that stands as a symbol of human ingenuity’s irreplaceability, though, is what I’ve been playing in the background on my HomePods all week while reading and writing: the soundtrack to Animal Crossing: New Horizons. Because Nintendo hasn’t made the official tracks available for streaming, I’ve been playing this fantastic album of jazz piano covers by Shin Giwon Piano on Apple Music. It takes me right back to those quiet, cozy, house-bound days of the pandemic. Could an AI ever take the place of composers like Kazumi Totaka? I remain hopeful that it won’t.

Maggie Rogers released her third album, Don’t Forget Me. I put it on for a walk around the neighborhood on Saturday evening and found it’s the kind of country-inflected folk rock album I tend to love. One song in particular, If Now Was Then, triggered my musical pattern recognition, and I realized a significant bit sounds very much like the part in Counting Crows’ Sullivan Street where Adam Duritz goes “I’m almost drowning in her sea”. It’s a lovely bit of borrowing that I enjoyed; putting copyright aside, experiencing a nostalgic callback to another song inside a new song is always cool. It’s one of the best things about hip-hop! But why is it okay when a human does it but not when it’s generative AI? I guess we’re back to Buddhism: everything hangs on intention.

===

Miscellanea

- I watched more Jujutsu Kaisen despite not being blown away by it. Mostly I’ve been keen to see the full scene of a clip I saw posted on Twitter, where the fight animation looked more kinetic and inventive than you’d normally expect. I decided that it must have come from Jujutsu Kaisen 0: The Movie, because movies have bigger budgets and the animation in season 1 looked nothing like it. And I had to finish season 1 in order to watch and understand the movie.

- Well, I saw the movie and it was alright, but it didn’t have that fight scene. So where is it?? That got me watching more episodes of the TV anime, and I don’t think I’ve ever seen a jump in quality like this between two seasons of a show. It seems a new director came on board (maybe more money too), and suddenly the art is cleaner, the camera angles are more striking and unconventional, and everything else went up a notch. I guess I’m watching another 20+ episodes of this then.

- I finished Netflix’s eight-episode adaptation of 3 Body Problem. I’m not invested enough to say I’d definitely watch a second season, assuming they pick it up at all.

- On that topic, Utada Hikaru released a greatest hits compilation called Science Fiction, with three “new” songs and 23 other classics that have been re-recorded, remixed, and/or remastered in Dolby Atmos. I don’t really know these songs, in that I have no idea what many of them are actually about, but I’ve heard them so much over the last 25 years that I probably know them more deeply than most.