It was Chinese New Year week and it’s not been the same for several years, partly because of Covid and partly because many key relations have simply gotten old. My uncles and aunties, for decades only known to me by honorary titles describing their places in the family hierarchy, are getting ill, weak, and unwilling to leave their homes or receive visitors. Several years ago I started to ask what their actual names were, because I grew up literally calling them names that translated to second son of third uncle, or eldest paternal uncle; I’ve often wondered why Chinese culture has mechanisms that foster emotional distance between children and adults, a tradition that feels increasingly out of touch with today’s world. But that’s how it goes.

I remember the typical CNY reunion dinner for many years as something to get through with gritted teeth and withheld snarky jokes, and if you’d told me then that I would look back now on those as the good times, I would have despaired. Now they appear as charmingly awkward and well-meaning attempts to bond, by people I might never get to wish a happy new year to again. I always thought the idea of an annual reunion made more sense against the backdrop of a vast country like China, but now that it’s hard to connect for all sorts of reasons even across our tiny one, I see the real terrain is time and memory, and so many relationships die starving in those fields. That’s how it goes too.

===

One of our friends lost his dad this week. He was by all accounts the kind of guy you call a ‘real character’. He went from a corporate career to becoming a writer in retirement, putting out three books in the last decade or so. Because we didn’t have any reunion dinner commitments at the usual time, we were able to attend his funeral wake and share in some lovely stories from the family. They managed the joke that this terrible timing right in the middle of festivities was the last prank he’d play on them. I learnt he had a regular blog, which he kept going even after suffering health setbacks — that’s dedication. Every week I wonder what I’m going to say here and always think the streak’s about to end.

Even the funeral was remarkable for the fact that he planned it all himself, leaving behind detailed instructions on what he wanted, to the point of getting his own headshot taken and sealed in an envelope which his family only opened after he was gone, so they wouldn’t have to fuss over this stuff at the worst time — that’s love. It got me thinking that everyone should prepare their own playlists and slideshows too. I might get started on it this year. Don’t be surprised if you come to my funeral and hear that Chinese AI dub of Van Morrison on repeat.

===

Other activity:

- I’ve started on a new book and only read two short chapters but I already know this is something special. It’s People Collide by Isle McElroy.

- We are back watching Below Deck and I’m still sure that this is one of the best management trainings you can get for your time. Every single season is full of unnecessary crew drama because people don’t communicate expectations, don’t provide clear feedback, and allow emotional reactions to escalate. I get that it’s not easy, and I’m not sure I do it so well myself, but the lines between action and consequence are so clear here; they’re literally edited together for entertainment. Another lesson: everyone is flawed. The people you root for because they’re usually sensible? Sometimes they fuck up! Working relationships are rarely black and white.

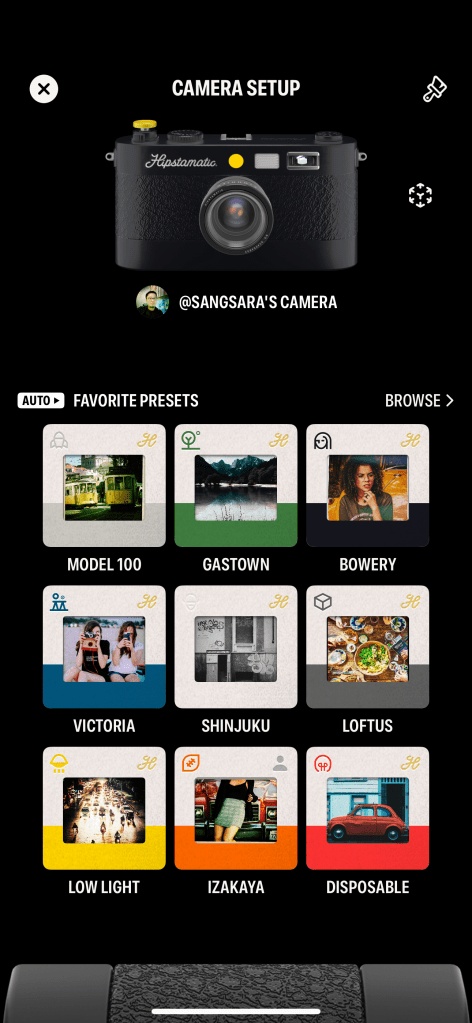

- Kanye West finally released Vultures two months after its initial release date, with a new cover and possibly a different tracklist. I haven’t heard it yet. But once again, Apple Music has not recommended me music I’m interested in, although I’m quasi-certain it will be featured somewhere in the app in the next few days. Right now the Singapore ‘Browse’ page is full of Chinese New Year related music that I definitely do not care about.

- After working too hard and not getting enough rest, Kim’s sort of fallen ill now, with me feeling a milder version of it. The timing could not be worse: we’re off on holiday soon, the kind where sneezing and aching and feeling weak might derail a complex itinerary.

- Speaking of which, I used ChatGPT to help plan this vacation, and I’ve taken those instructions and made a custom GPT called AI-tinerary which might help you if you’re going someplace new and want to create a multi-day schedule of things to do. It can work off your individual interests and transport modes, as well as answer other travel-related questions you may have. At some point I’ll be able to make it plot your route on a map (if you ask it now, it’ll generate some wacky DALL·E map drawing that you should absolutely NOT follow).

- You should know by next weekend whether these AI-generated plans worked out, or if we tried to stay in towns that don’t actually exist.